Idea

Apply a point-wise spatial attention mechanism to scene parsing.

Method

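Let $z_i$ be the newly aggregated feature at position $i$, and $x_i$ be the feature representation at position $i$ in the input feature map $X$. Then we have the following bi-directional propagation formula:

$$z_i = \frac{1}{N} \sum_{\forall j \in \Omega(i)} F_{\Delta_{ij}}(x_i)\, x_j + \frac{1}{N} \sum_{\forall j \in \Omega(i)} F_{\Delta_{ij}}(x_j)\, x_j$$

where $\forall j \in \Omega(i)$ enumerates all positions in the region of interest associated with $i$, $\Delta_{ij}$ represents the relative location of positions $i$ and $j$, and $N$ is a normalization factor.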

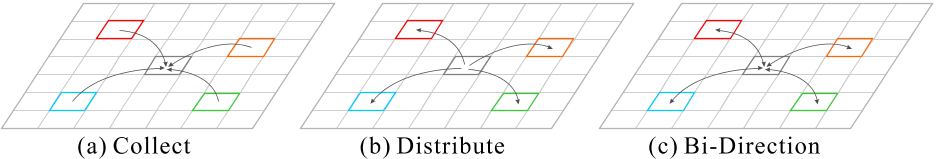

Bi-Direction Information Propagation

For the first term, $F_{\Delta_{ij}}(x_i)$ encodes to what extent the features at other positions can help prediction. Each position collects information from other positions. For the second term, $F_{\Delta_{ij}}(x_j)$ denotes the importance of the feature at one position to the features at other positions. Each position distributes information to others.

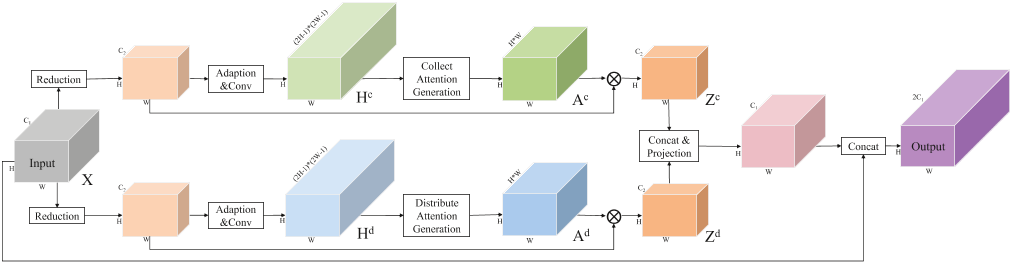

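Specifically, in this model both $F_{\Delta_{ij}}(x_i)$ and $F_{\Delta_{ij}}(x_j)$ can be regarded as predicted attention values used to aggregate feature $x_j$, so the formula above can be rewritten as

$$z_i = \frac{1}{N} \sum_{\forall j} a^c_{i,j}\, x_j + \frac{1}{N} \sum_{\forall j} a^d_{i,j}\, x_j$$

where $a^c_{i,j}$ and $a^d_{i,j}$ denote the predicted attention values in the point-wise attention maps $A^c$ and $A^d$ from the collect and distribute branches, respectively.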
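
The aggregation described above can be sketched in NumPy: each output feature is a normalized, attention-weighted sum of the input features, computed once with collect weights and once with distribute weights, and the two results are added. This is only an illustrative sketch. The function name `psa_aggregate`, the flattened `(N, C)` layout, and the choice of letting every position attend to all positions (so the normalizer equals the number of positions) are assumptions made for the example, not details taken from these notes.

```python
import numpy as np

def psa_aggregate(x, attn_collect, attn_distribute):
    """Aggregate features with two point-wise attention maps.

    x:               (N, C) array, one feature vector per position (hypothetical layout).
    attn_collect:    (N, N) array, entry [i, j] is the collect weight for pair (i, j).
    attn_distribute: (N, N) array, entry [i, j] is the distribute weight for pair (i, j).
    Returns z of shape (N, C).
    """
    n = x.shape[0]  # assumption: normalize by the number of positions
    z_collect = attn_collect @ x / n        # weighted sum over j with collect weights
    z_distribute = attn_distribute @ x / n  # weighted sum over j with distribute weights
    return z_collect + z_distribute

# Toy usage with random values (shapes only, no trained weights).
rng = np.random.default_rng(0)
H, W, C = 3, 4, 5
x = rng.normal(size=(H * W, C))
a_c = rng.random(size=(H * W, H * W))
a_d = rng.random(size=(H * W, H * W))
z = psa_aggregate(x, a_c, a_d)
print(z.shape)
```

Flattening the spatial grid to `N = H * W` rows keeps both weighted sums as plain matrix products; a real implementation would typically work on batched tensors instead.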

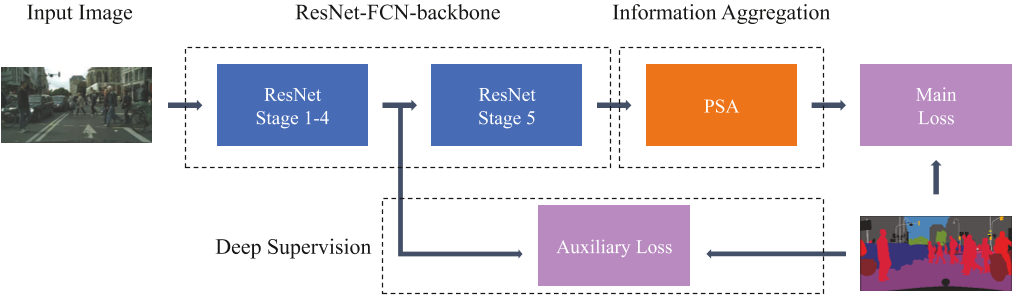

Architecture

PSA Module

Attention Map Generation

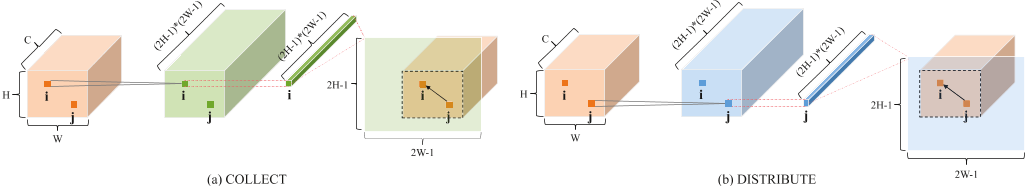

In the collect branch, at each position $i$, located at the $k$-th row and $l$-th column, we predict how the current position is related to other positions based on the feature at position $i$.

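Specifically, element $a^c_{i,j}$ at the $s$-th row and $t$-th column in the attention mask $a^c_i$ (i.e. $a^c_{[k,l],[s,t]}$) is

$$a^c_{[k,l],[s,t]} = h^c_{[k,l],[H-k+s,\,W-l+t]}, \quad \forall s \in [0, H),\ t \in [0, W)$$

where $[\cdot,\cdot]$ indexes a position by row and column, and $h^c_i$ is the over-complete map of size $(2H-1) \times (2W-1)$ predicted at position $i$ for an $H \times W$ feature map.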
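
The relative-position indexing described above can be illustrated with a small NumPy sketch. It assumes that the branch predicts, for each position, a map of size `(2H-1, 2W-1)` covering every possible relative offset, and that the `H x W` attention mask is the window of that map whose center lines up with the current position. The function name `collect_mask` and the fully 0-based offset `H - 1 - k` are choices made for this sketch, so the offset may differ by one from the notation in these notes.

```python
import numpy as np

def collect_mask(h_i, k, l, H, W):
    """Crop the H x W attention mask for position (k, l).

    h_i: (2H-1, 2W-1) over-complete map predicted at position (k, l);
         its center entry [H-1, W-1] is assumed to mean "zero offset".
    k, l: 0-based row and column of the current position.
    Returns mask with mask[s, t] = h_i[H-1-k+s, W-1-l+t].
    """
    top = H - 1 - k   # row in h_i that corresponds to s = 0
    left = W - 1 - l  # column in h_i that corresponds to t = 0
    return h_i[top:top + H, left:left + W]

# Toy usage: a 2 x 3 feature map, so the over-complete map is 3 x 5.
H, W = 2, 3
h = np.arange((2 * H - 1) * (2 * W - 1)).reshape(2 * H - 1, 2 * W - 1)
mask_corner = collect_mask(h, 0, 0, H, W)  # position in the top-left corner
mask_last = collect_mask(h, 1, 2, H, W)    # position in the bottom-right corner
print(mask_corner)
print(mask_last)
```

In both crops the center of `h` lands on the current position itself, which is why a single over-complete map is enough to serve any position in the grid.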

Model