Motivation

- The imbalance between foreground and background examples is a key problem in training detection models

- OHEM (Online Hard Example Mining) automatically selects hard examples to address this issue

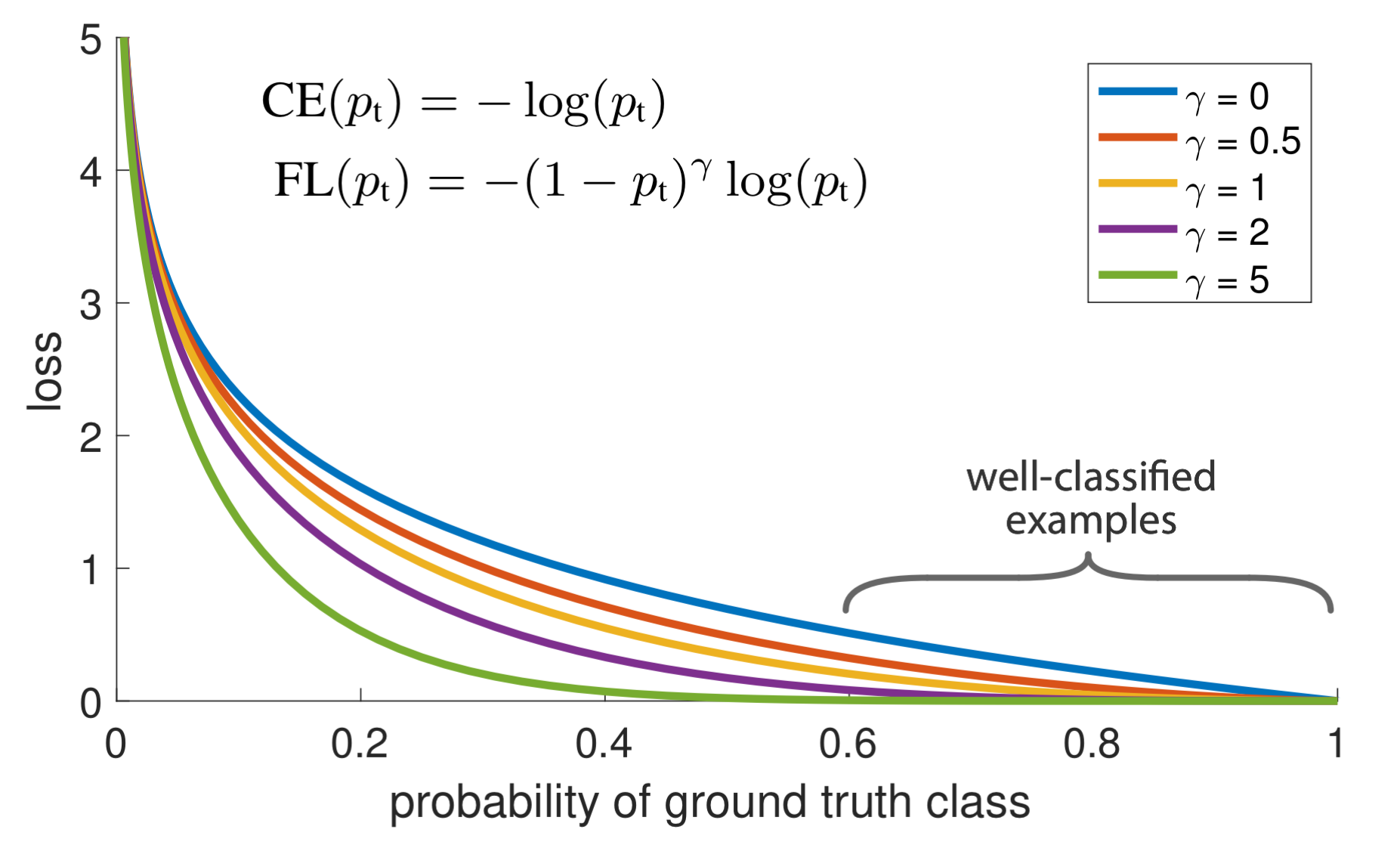

- This paper proposes the Focal Loss, a dynamically scaled cross-entropy loss that down-weights the loss assigned to well-classified examples

Method

Binary CE Loss

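We start from the cross-entropy (CE) loss for binary classification:

$$
\mathrm{CE}(p, y) =
\begin{cases}
-\log(p) & \text{if } y = 1 \\
-\log(1 - p) & \text{otherwise}
\end{cases}
$$

In the above, $y \in \{\pm 1\}$ specifies the ground-truth class and $p \in [0, 1]$ is the model's estimated probability for the class with label $y = 1$. For notational convenience, we define $p_t$ as

$$
p_t =
\begin{cases}
p & \text{if } y = 1 \\
1 - p & \text{otherwise}
\end{cases}
$$

and rewrite $\mathrm{CE}(p, y) = \mathrm{CE}(p_t) = -\log(p_t)$.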
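As a minimal sketch of this rewrite, the snippet below computes the binary CE loss through the probability assigned to the ground-truth class. It assumes labels encoded as +1 / -1 and a scalar probability `p` for the class with label +1; the function names `prob_true_class` and `cross_entropy` are illustrative, not taken from the paper.

```python
import math


def prob_true_class(p: float, y: int) -> float:
    """Return p_t: p when y == 1, otherwise 1 - p (y is assumed to be +1 or -1)."""
    return p if y == 1 else 1.0 - p


def cross_entropy(p: float, y: int) -> float:
    """Binary cross-entropy written as -log(p_t).

    Note: this scalar sketch does not guard against p_t == 0.
    """
    return -math.log(prob_true_class(p, y))


# A confident correct prediction has a small loss,
# a confident wrong prediction has a large one.
loss_easy = cross_entropy(0.9, 1)    # p_t = 0.9
loss_hard = cross_entropy(0.9, -1)   # p_t = 0.1
```

Writing the loss in terms of the probability of the true class removes the case split, which is what makes the later weighted variants compact.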

Balanced Cross Entropy

A common method for addressing class imbalance is to introduce a weighting factor $\alpha \in [0, 1]$ for class $1$ and $1 - \alpha$ for class $-1$. In practice $\alpha$ may be set by inverse class frequency or treated as a hyperparameter to set by cross-validation. For notational convenience, we define $\alpha_t$ analogously to how we defined $p_t$. We write the $\alpha$-balanced CE loss as:

$$
\mathrm{CE}(p_t) = -\alpha_t \log(p_t)
$$
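The class weighting can be sketched in a few lines. This assumes the same +1 / -1 label encoding as above, with the weight `alpha` applied to the positive class and `1 - alpha` to the negative class; the function name `alpha_balanced_ce` and the value `alpha = 0.75` in the example are illustrative choices only.

```python
import math


def alpha_balanced_ce(p: float, y: int, alpha: float) -> float:
    """Alpha-balanced binary cross-entropy: -alpha_t * log(p_t).

    p     : predicted probability of the class with label +1
    y     : ground-truth label, assumed to be +1 or -1
    alpha : weight for the positive class; the negative class gets 1 - alpha
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * math.log(p_t)


# Illustrative weight: positives count three times as much as negatives.
alpha = 0.75
loss_pos = alpha_balanced_ce(0.6, 1, alpha)    # weighted by 0.75
loss_neg = alpha_balanced_ce(0.4, -1, alpha)   # same p_t = 0.6, weighted by 0.25
```

Both examples have the same probability for the true class, so the difference in loss comes only from the class weight.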

Focal Loss

While $\alpha$ balances the importance of positive/negative examples, it does not differentiate between easy/hard examples.

Therefore, we propose to add a modulating factor $(1 - p_t)^\gamma$ to the cross-entropy loss, with tunable focusing parameter $\gamma \ge 0$. We define the focal loss as

$$
\mathrm{FL}(p_t) = -(1 - p_t)^\gamma \log(p_t)
$$
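A scalar sketch of the focal loss follows, assuming labels encoded as +1 / -1 and `p` the predicted probability of the +1 class. The function name `focal_loss` and the default `gamma = 2.0` are choices made for this illustration; a training implementation would operate on batched tensors and guard against a true-class probability of exactly 0.

```python
import math


def focal_loss(p: float, y: int, gamma: float = 2.0) -> float:
    """Focal loss: -(1 - p_t)^gamma * log(p_t).

    p     : predicted probability of the class with label +1
    y     : ground-truth label, assumed to be +1 or -1
    gamma : focusing parameter (>= 0); gamma = 0 recovers plain cross-entropy
    """
    p_t = p if y == 1 else 1.0 - p
    modulating_factor = (1.0 - p_t) ** gamma
    return -modulating_factor * math.log(p_t)


# Easy example (p_t = 0.9): loss is scaled by (0.1)^2.
easy = focal_loss(0.9, 1)
# Hard example (p_t = 0.1): loss is scaled by (0.9)^2, so it is barely reduced.
hard = focal_loss(0.1, 1)
```

Because the modulating factor depends only on the probability of the true class, it can be combined with a per-class weight without changing this structure.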

Properties of Focal Loss

- When an example is misclassified and $p_t$ is small, the modulating factor is near $1$ and the loss is unaffected. As $p_t \to 1$, the factor goes to $0$ and the loss for well-classified examples is down-weighted.

- The focusing parameter $\gamma$ smoothly adjusts the rate at which easy examples are down-weighted. When $\gamma = 0$, FL is equivalent to CE, and as $\gamma$ is increased the effect of the modulating factor is likewise increased.
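The two properties can be checked numerically. The sketch below evaluates the modulating factor, which equals the ratio between the focal loss and the plain cross-entropy, for a few probabilities of the true class. The helper name `modulating_factor` and the sample values are illustrative.

```python
def modulating_factor(p_t: float, gamma: float) -> float:
    """Ratio FL / CE for a given true-class probability p_t: (1 - p_t)^gamma."""
    return (1.0 - p_t) ** gamma


# With gamma = 0 the factor is always 1, so FL reduces to CE.
# With gamma = 2 a well-classified example (p_t = 0.9) is scaled by about 0.01,
# i.e. roughly 100x lower loss, while p_t = 0.5 is scaled by 0.25.
for gamma in (0.0, 0.5, 1.0, 2.0, 5.0):
    factors = [modulating_factor(p_t, gamma) for p_t in (0.1, 0.5, 0.9)]
    print(f"gamma={gamma}: " + ", ".join(f"{f:.4f}" for f in factors))
```

Larger values of the focusing parameter shrink the factor faster as the true-class probability approaches 1, which widens the range of examples treated as easy.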

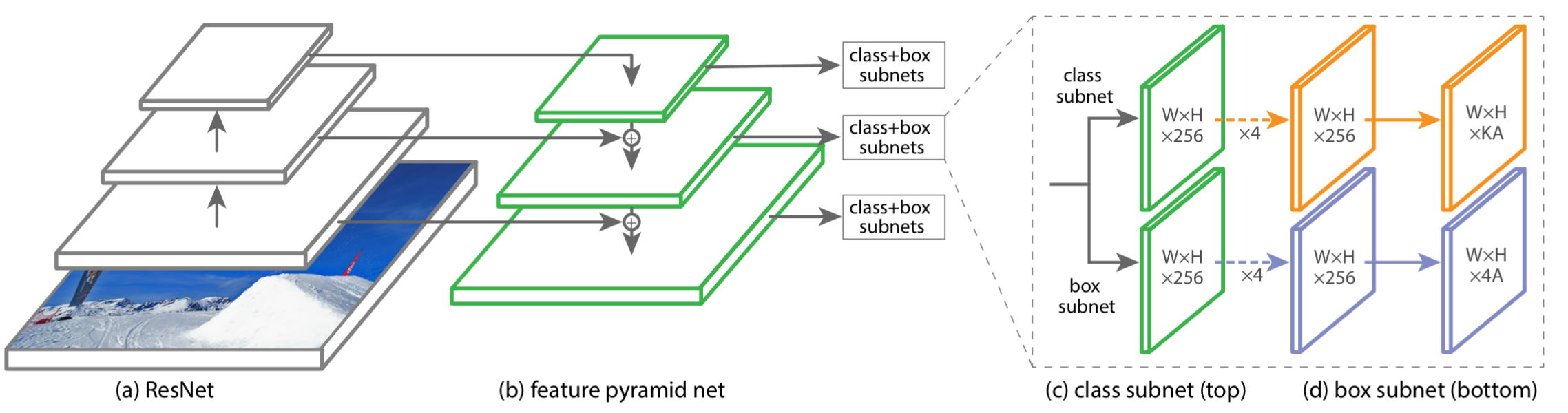

RetinaNet

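RetinaNet is a one-stage detector that combines a Feature Pyramid Network (FPN) backbone with the Focal Loss as its classification loss.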
